It took about 3 weeks, but I've now completely re-written the backend for War Worlds and moved it off App Engine. I've been running for about a month now on Google Compute Engine and the results are in: it's fantastic (yes, I didn't move far from Google's Cloud... I'll post reasons as to why I chose Compute Engine over, say, EC2 or a VPS in a future blog post. Suffice to say, comparing cloud providers is actually very difficult).

The biggest win for me has been in terms of cost, and that's what I want to compare today. To begin with, a quick breakdown of how the game is designed, so you can see what the typical usage patterns are.

Backend Design

The War Worlds backend is basically a REST-like web application that provides an interface to a data store. The world is made up of "sectors" and each sector has a collection of stars. Each star is (mostly) a self-contained ecosystem. On a star you have multiple planets, on a planet (possibly) one colony, on a colony you have multiple buildings. Additionally, you can have fleets of ships around a star. Everything in the game takes a certain amount of "real-time" to complete. Ships take a few hours to travel between stars, buildings take a few hours to complete and so on. [The Getting Started guide has a good overview of the game from a user's point of view, if you're not familiar].

If there is nobody actually playing the game, then the server sits (largely) idle. When you pull up a star in the game client, the server runs a simulation of everything that has happened on the star since the last simulation (calculating things like population growth, build progress and so on). When it runs a simulation, there are certain future events which may need to be triggered (such as when a build completes) that the game saves off to be triggered at the appropriate time.
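To make the "simulate on demand" idea concrete, here is a minimal sketch in Java. It is not the game's actual code: the `StarSketch` class, its fields and the linear build-progress rule are all assumptions invented for illustration. The point is only that a star catches up on elapsed time when somebody looks at it, and reports the next future event so it can be saved off.

```java
import java.time.Duration;
import java.time.Instant;

// Hypothetical sketch: a star is simulated only when it is requested,
// covering all the time that has passed since the previous simulation.
class StarSketch {
    private Instant lastSimulated;
    private double buildProgress;           // 0.0 (not started) to 1.0 (complete)
    private final Duration totalBuildTime;  // assumed constant, for simplicity

    StarSketch(Instant created, Duration totalBuildTime) {
        this.lastSimulated = created;
        this.totalBuildTime = totalBuildTime;
    }

    // Advance the star from lastSimulated up to 'now'.
    // Returns the time at which the build will complete (a future event the
    // caller should save), or null if the build has already finished.
    Instant simulate(Instant now) {
        Duration elapsed = Duration.between(lastSimulated, now);
        double step = (double) elapsed.toMillis() / totalBuildTime.toMillis();
        buildProgress = Math.min(1.0, buildProgress + step);
        lastSimulated = now;
        if (buildProgress >= 1.0) {
            return null;
        }
        long remainingMillis =
                Math.round((1.0 - buildProgress) * totalBuildTime.toMillis());
        return now.plusMillis(remainingMillis);
    }

    double getBuildProgress() {
        return buildProgress;
    }
}
```

With a 2 hour build, simulating 30 minutes after creation leaves the build a quarter done and reports a completion time 90 minutes later.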

Main Problems

There were a few things in the App Engine backend which were not ideal. Some were my own fault, some were due to limitations of App Engine itself.

The first was entirely my own fault. I had originally built the backend in Python, and the frontend - being an Android app - in Java. This posed a problem, because it made it impossible to share code between the client and server. I couldn't run the same simulation on the client as I did on the server, so in order to make things on the client feel responsive I basically had to duplicate the simulation code, once in Python and once in Java. This resulted in quite a few out-of-sync bugs where the client thought one thing was happening but the server thought another.

Additionally, I was using the Task Queue to schedule the future events that I mentioned above (such as build-complete, fleet-move-complete, etc). This was a problem because App Engine provides no (simple) way to cancel future tasks. So if you build something and it's queued for 2 hours from now, then you re-adjust your colony's focus so that the build should only take 1 hour, the game would re-queue the "build-complete" event for 1 hour from now, and the one scheduled for 2 hours would still run, realise it had nothing to do and just exit.
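For contrast, on a plain JVM it is fairly easy to keep a handle to each pending event and cancel it before re-queuing. The sketch below uses the JDK's `ScheduledExecutorService`; the `EventSchedulerSketch` class and the string event keys are hypothetical, and this is just one possible approach, not necessarily how the War Worlds server does it.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

// Hypothetical sketch: at most one pending event per key; re-scheduling
// a key cancels whatever was queued for it before.
class EventSchedulerSketch {
    private final ScheduledExecutorService executor =
            Executors.newSingleThreadScheduledExecutor();
    private final Map<String, ScheduledFuture<?>> pending = new HashMap<>();

    // Schedule (or re-schedule) the event identified by 'key'.
    synchronized ScheduledFuture<?> schedule(
            String key, long delay, TimeUnit unit, Runnable action) {
        ScheduledFuture<?> previous = pending.remove(key);
        if (previous != null) {
            // Cancel the stale event; do not interrupt it if it is already running.
            previous.cancel(false);
        }
        ScheduledFuture<?> future = executor.schedule(action, delay, unit);
        pending.put(key, future);
        return future;
    }

    // Number of events still waiting to run.
    synchronized int pendingCount() {
        pending.values().removeIf(f -> f.isDone()); // drop finished or cancelled
        return pending.size();
    }

    // Stop the worker thread; queued events are discarded.
    void shutdown() {
        executor.shutdownNow();
    }
}
```

In the build example, calling `schedule("build-complete:42", 2, TimeUnit.HOURS, ...)` and then `schedule("build-complete:42", 1, TimeUnit.HOURS, ...)` leaves only the 1 hour event queued. A real server would also need to persist pending events so they survive a restart, which this sketch ignores.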

Finally, App Engine has a hard 60 second limit on frontend requests. For the most part, this was fine. But certain requests started to take longer than 60 seconds, particularly when empires started getting larger. Collecting taxes requires simulating every star in your empire and when you've got 200 stars, that can take a while (more on this later, but App Engine seems rather slow for CPU-bound tasks). This meant I needed a complex system involving tasks and callbacks just to do something like collect taxes: the user would press the "Collect Taxes" button, which would fire off a task in the backend. When the task was complete, it would send a notification back to the client telling it to refresh the empire (with the new tax value). Having to wait 1 minute+ just to get the taxes from your colonies was quite annoying.

Costs Start Skyrocketing

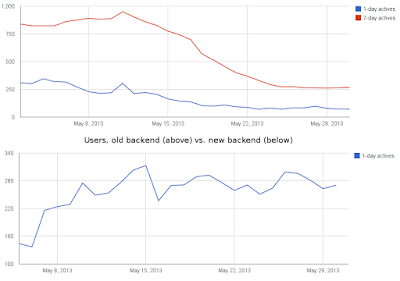

For a while, the game was doing alright. It was costing me about $10 per week to run it and I didn't mind that I was losing money (it's always been a labour of love anyway). But around the end of March, usage started to climb. In a week, I had double the daily active users and by mid-April or so, I was seeing about 300 daily active users and about 2 requests per second to the server.

It doesn't seem like much, but my costs had skyrocketed. It went from about $10 per week to around $20 per day. This was becoming unsustainable: for an app that was making $100 per month, no labour of love was going to sustain those kinds of losses. I either had to dramatically increase revenue (I didn't want to do that) or drastically reduce costs. Looking at my billing data in App Engine, a couple of things stood out:

The three biggest costs were frontend instance hours, data store reads and data store writes. Unfortunately, I couldn't see a way to reduce any of those. I was already using memcache to its fullest, but in a game like this, around half of requests are mutate requests, which makes caching in general not that useful (it was still getting about a 60% hit rate in memcache, which I thought was pretty good).

Frontend instance hours is something I tried tweaking a few settings for. For example, a larger instance costs more per hour, but because requests should complete faster, theoretically fewer instances should be required. It ended up being a bit of a wash (and the smallest instances were too slow by far anyway). I also tried switching to reserved instances, which reduced costs by about 30% (from around $9 per day to around $6), but with usage still climbing, that saving was quickly wiped out again.

I had to do something: the longer I left it, the more money I was losing (again, it wasn't ever really breaking the bank, but over a hundred dollars a week is something the wife starts to notice). Eventually, I decided that App Engine just wasn't worth the cost and that I would port away.

New Design

I settled on two main requirements for the new design:

- It should be portable from one provider to another, and

- It should be written in Java, so that I could share code between the client and server

So the basic design I came up with was a server built on the Jetty servlet container, MySQL for the database and Nginx for the frontend (to handle HTTPS and the like). MySQL was really just chosen because I was familiar with it, and it has pretty good tooling. Communication between client and server was always based off protocol buffers and I decided the easiest way to build the new server would be to keep the exact same URL scheme and data interface, just switch out the "base" URL. I ended up adding a "realm" feature to the game client so that players could choose to join the "Alpha" realm (i.e. the old App Engine backend) or the "Beta" realm (the new one I developed) with only minimal changes required in the game client.
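Because both backends share the same URL scheme, a realm can be little more than a base URL. Here is a rough sketch of that idea; the `RealmSketch` enum and the `example.com` host names are placeholders of mine, not the game's real code or endpoints.

```java
import java.net.URI;

// Hypothetical sketch: both realms expose identical paths, so the only
// thing the client needs to switch is the base URL.
enum RealmSketch {
    ALPHA("https://alpha.example.com/api/"),  // stands in for the old App Engine backend
    BETA("https://beta.example.com/api/");    // stands in for the new Jetty backend

    private final URI baseUrl;

    RealmSketch(String baseUrl) {
        this.baseUrl = URI.create(baseUrl);
    }

    // Build the full URL for a request path such as "stars/123".
    URI resolve(String relativePath) {
        return baseUrl.resolve(relativePath);
    }
}
```

The rest of the client can then call something like `realm.resolve("stars/123")` without caring which backend it is talking to.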

It took about three weeks of nights and weekends to get the server re-written in Java and running on Jetty (plus a few more weeks to iron out all the bugs). The changes to the client were relatively minimal; most of the code changes on the client were to allow it to switch between the "Alpha" and "Beta" servers. Re-writing the server in Java would also allow me, in time, to perform more of the simulation on the client, only simulating on the server when actually required, but for the initial version the new server did basically the same work as the old server did.

After running the new server for about a month, the user count is now approximately what it was on the App Engine backend, performance is much better, and costs are a fraction of what they were: around $230 per month, down from around $600. The new server is running on a single n1-highcpu-4-d instance of Google Compute Engine.

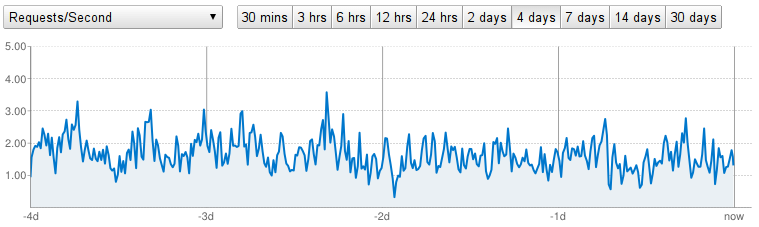

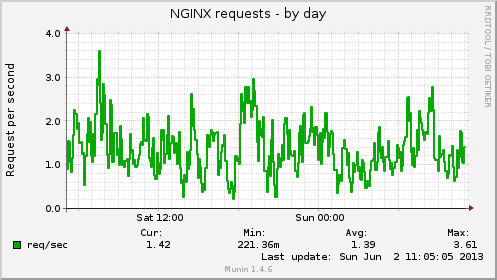

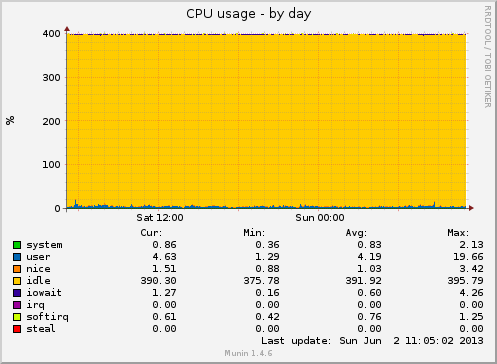

As you can see from the graphs above, we're doing approximately the same ~2 requests a second (slightly lower, which is likely due to the better way future events are queued). But CPU usage is barely above 1%, and I/O and network (not shown above) are similarly around 1% of what the instance allows.

So I'm paying less than half as much as I was before, and I can easily sustain 10-20 times the usage without incurring any extra charges.

Comparison of Performance

As always, real-world performance comparisons are hard and this is not entirely apples-to-apples. The new server is written in Java instead of Python, talks to a MySQL database instead of App Engine's HRD and so on. But from a user's point of view, both servers are exactly identical -- that is, they provide the same functionality and the same interface.

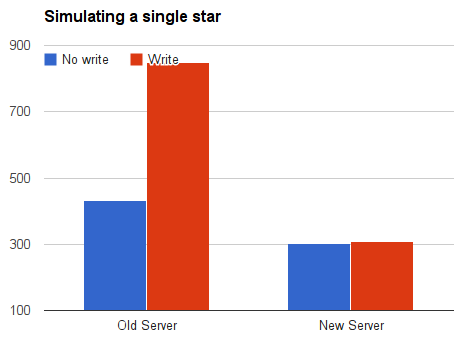

There are a few things which are (somewhat) directly comparable. Running the simulation of a single star is something that happens quite a lot, and is fairly easy to compare.

The above graph shows the difference in performance between simulating stars in the old server and the new server. The blue column shows the time taken (in milliseconds) just to simulate the star, without storing the result back in the data store. The red column is the time taken to simulate a star and store the results back in the data store (these times also include round-trip network time to the server, which is about 150ms for me, located as I am in Australia). In the old server, I was using the App Engine HRD, which uses the Paxos algorithm to ensure data consistency in the event of failures. The problem with Paxos is that it has inherent performance trade-offs that make writes extremely expensive. In a game like War Worlds, where about half of all requests end up mutating data, you can imagine how this can hurt performance.

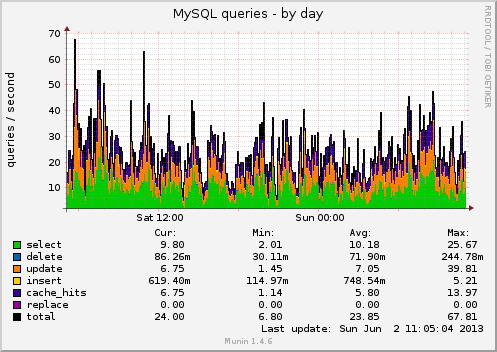

Above is the graph of SQL queries to the database. As you can see, it's about 2:1 SELECTs to UPDATEs, but I'd say that's mostly because I've done absolutely no caching at all (so for example, every request requires a query to the "session" table to get the user's empire ID and so on, which could easily be cached to dramatically reduce the number of SELECTs required).
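As an idea of what caching that session lookup could look like, here is a small in-memory LRU cache built on the JDK's `LinkedHashMap`. It's a sketch only: `SessionCacheSketch`, the cookie-to-empire-ID mapping and the `loader` function (which stands in for the SELECT on the "session" table) are assumptions for illustration, and nothing like this exists in the server yet.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical sketch: remember the empire ID for recently seen sessions so
// that most requests can skip the database query entirely.
class SessionCacheSketch {
    private final Function<String, Integer> loader; // stands in for the SQL SELECT
    private final Map<String, Integer> cache;

    SessionCacheSketch(int maxEntries, Function<String, Integer> loader) {
        this.loader = loader;
        // accessOrder=true keeps entries ordered least-recently-used first,
        // and removeEldestEntry evicts that entry once the cache is full.
        this.cache = new LinkedHashMap<String, Integer>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<String, Integer> eldest) {
                return size() > maxEntries;
            }
        };
    }

    // Return the empire ID for a session, querying the loader only on a miss.
    // Assumes the loader never returns null.
    synchronized int getEmpireId(String sessionCookie) {
        Integer empireId = cache.get(sessionCookie);
        if (empireId == null) {
            empireId = loader.apply(sessionCookie);
            cache.put(sessionCookie, empireId);
        }
        return empireId;
    }

    // Call this when a session ends or its empire changes.
    synchronized void invalidate(String sessionCookie) {
        cache.remove(sessionCookie);
    }
}
```

Since sessions rarely change once created, invalidation is simple here; caching the star data itself would be much trickier given how many requests are writes.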

What I miss

There's a couple of things I miss about App Engine. In particular, the ease-of-deployment and automatic data integrity management. The way you develop on the local instance, then run a single command to upload it to the server and switch to the new code with no downtime was great. I basically ended up writing an ant script that compiled the server, zipped it up and copied it to a directory on the server, then another bash script on the server which I could run that unzipped the files, and swapped out the live server with no (or almost no) downtime. It's not that hard, but on App Engine you just don't think about it.

Similarly with backups: on App Engine, data integrity is guaranteed so backups are really not required. I ended up coming up with a simple cron task that takes a full dump of the database every hour and copies it to a "permanent" storage device. In the event of catastrophic failure, I should be able to spin up a new instance, copy my image to it and get the last hour's backup copied over in fairly short order. The main issue is the fact that I sleep for ~8 hours a day and work for ~8 hours a day, meaning it could be some time between when a problem occurs and when I'm aware of it.

Conclusion

At the end of the day, I still really like App Engine (and I will continue to use it for things like this blog). But it's clearly not well-suited to all workloads and unless you've got cash to burn, you do need to think about the typical workload of your application and whether it's actually suitable or not. If you have heavy CPU requirements or a high write:read ratio to the data store, then App Engine may not be the best choice.